Ziheng Wang

MoCha: End-to-End Video Character Replacement without Structural Guidance

Jan 14, 2026

Abstract:Controllable video character replacement with a user-provided identity remains a challenging problem due to the lack of paired video data. Prior works have predominantly relied on a reconstruction-based paradigm that requires per-frame segmentation masks and explicit structural guidance (e.g., skeleton, depth). This reliance, however, severely limits their generalizability in complex scenarios involving occlusions, character-object interactions, unusual poses, or challenging illumination, often leading to visual artifacts and temporal inconsistencies. In this paper, we propose MoCha, a pioneering framework that bypasses these limitations by requiring only a single arbitrary frame mask. To effectively adapt the multi-modal input condition and enhance facial identity, we introduce a condition-aware RoPE and employ an RL-based post-training stage. Furthermore, to overcome the scarcity of qualified paired-training data, we propose a comprehensive data construction pipeline. Specifically, we design three specialized datasets: a high-fidelity rendered dataset built with Unreal Engine 5 (UE5), an expression-driven dataset synthesized by current portrait animation techniques, and an augmented dataset derived from existing video-mask pairs. Extensive experiments demonstrate that our method substantially outperforms existing state-of-the-art approaches. We will release the code to facilitate further research. Please refer to our project page for more details: orange-3dv-team.github.io/MoCha

UniCorn: Towards Self-Improving Unified Multimodal Models through Self-Generated Supervision

Jan 08, 2026

Abstract:While Unified Multimodal Models (UMMs) have achieved remarkable success in cross-modal comprehension, a significant gap persists in their ability to leverage such internal knowledge for high-quality generation. We formalize this discrepancy as Conduction Aphasia, a phenomenon where models accurately interpret multimodal inputs but struggle to translate that understanding into faithful and controllable synthesis. To address this, we propose UniCorn, a simple yet elegant self-improvement framework that eliminates the need for external data or teacher supervision. By partitioning a single UMM into three collaborative roles: Proposer, Solver, and Judge, UniCorn generates high-quality interactions via self-play and employs cognitive pattern reconstruction to distill latent understanding into explicit generative signals. To validate the restoration of multimodal coherence, we introduce UniCycle, a cycle-consistency benchmark based on a Text to Image to Text reconstruction loop. Extensive experiments demonstrate that UniCorn achieves comprehensive and substantial improvements over the base model across six general image generation benchmarks. Notably, it achieves SOTA performance on TIIF (73.8), DPG (86.8), CompBench (88.5), and UniCycle while further delivering substantial gains of +5.0 on WISE and +6.5 on OneIG. These results highlight that our method significantly enhances T2I generation while maintaining robust comprehension, demonstrating the scalability of fully self-supervised refinement for unified multimodal intelligence.

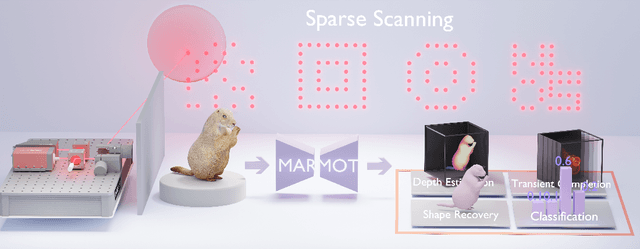

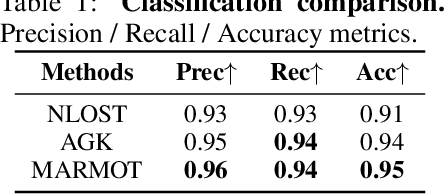

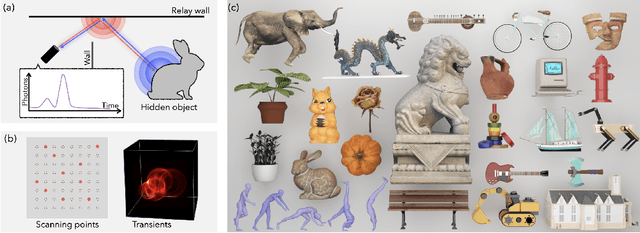

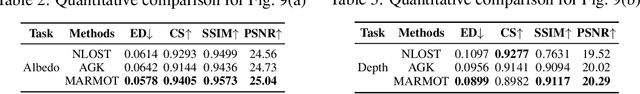

MARMOT: Masked Autoencoder for Modeling Transient Imaging

Jun 10, 2025

Abstract:Pretrained models have demonstrated impressive success in many modalities such as language and vision. Recent works facilitate the pretraining paradigm in imaging research. Transients are a novel modality, captured for an object as photon counts versus arrival times using a precisely time-resolved sensor. In non-line-of-sight (NLOS) scenarios in particular, transients of hidden objects are measured beyond the sensor's direct line of sight. Using NLOS transients, the majority of previous works optimize volume density or surfaces to reconstruct the hidden objects and do not transfer priors learned from datasets. In this work, we present a masked autoencoder for modeling transient imaging, or MARMOT, to facilitate NLOS applications. MARMOT is a self-supervised model pretrained on massive and diverse NLOS transient datasets. Using a Transformer-based encoder-decoder, MARMOT learns features from partially masked transients via a scanning pattern mask (SPM), where the unmasked subset is functionally equivalent to arbitrary sampling, and predicts full measurements. Pretrained on TransVerse, a synthesized transient dataset of 500K 3D models, MARMOT adapts to downstream imaging tasks using direct feature transfer or decoder finetuning. Comprehensive experiments are carried out in comparison with state-of-the-art methods. Quantitative and qualitative results demonstrate the efficiency of MARMOT.

TimeZero: Temporal Video Grounding with Reasoning-Guided LVLM

Mar 17, 2025

Abstract:We introduce TimeZero, a reasoning-guided LVLM designed for the temporal video grounding (TVG) task. This task requires precisely localizing relevant video segments within long videos based on a given language query. TimeZero tackles this challenge by extending the inference process, enabling the model to reason about video-language relationships solely through reinforcement learning. To evaluate the effectiveness of TimeZero, we conduct experiments on two benchmarks, where TimeZero achieves state-of-the-art performance on Charades-STA. Code is available at https://github.com/www-Ye/TimeZero.
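To make the grounding task concrete, the sketch below scores a predicted segment against a ground-truth segment with temporal intersection-over-union, a metric commonly used for temporal video grounding. The abstract does not state which metric TimeZero reports, so the metric choice, the function names, and the 0.5 threshold are all assumptions made for illustration.

```python
def temporal_iou(pred, gt):
    """Intersection-over-union of two (start, end) segments, in seconds."""
    pred_start, pred_end = pred
    gt_start, gt_end = gt
    # Overlap is zero when the segments do not intersect.
    inter = max(0.0, min(pred_end, gt_end) - max(pred_start, gt_start))
    union = (pred_end - pred_start) + (gt_end - gt_start) - inter
    return inter / union if union > 0 else 0.0


def recall_at_1(preds, gts, threshold=0.5):
    """Fraction of queries whose single prediction reaches the IoU threshold.

    The threshold value is an illustrative assumption.
    """
    hits = sum(temporal_iou(p, g) >= threshold for p, g in zip(preds, gts))
    return hits / len(gts)
```

A prediction of (2.0, 6.0) against a ground truth of (4.0, 8.0) overlaps for 2 seconds out of a 6-second union, so its IoU is one third and it would not count as a hit at a 0.5 threshold.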

TransiT: Transient Transformer for Non-line-of-sight Videography

Mar 14, 2025

Abstract:High-quality, high-speed videography using non-line-of-sight (NLOS) imaging benefits autonomous navigation, collision prevention, and post-disaster search and rescue tasks. Current solutions must trade off frame rate against image quality. High frame rates, for example, can be achieved by reducing either per-point scanning time or scanning density, but at the cost of lowering the information density of individual frames. A fast scanning process further reduces the signal-to-noise ratio, and different scanning systems exhibit different distortion characteristics. In this work, we design and employ a new Transient Transformer architecture called TransiT to achieve real-time NLOS recovery under fast scans. TransiT directly compresses the temporal dimension of input transients to extract features, reducing computation costs and meeting high frame rate requirements. It further adopts a feature fusion mechanism and employs a spatial-temporal Transformer to capture features of NLOS transient videos. Moreover, TransiT applies transfer learning to bridge the gap between synthetic and real-measured data. In real experiments, TransiT reconstructs NLOS videos at a $64 \times 64$ resolution and 10 frames per second from sparse $16 \times 16$ transients measured at an exposure time of 0.4 ms per point. We will make our code and dataset available to the community.
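The reported scan settings can be checked with a little arithmetic. The sketch below assumes that each frame requires one full pass over the $16 \times 16$ grid and that the scanner adds no overhead between points; neither assumption is stated in the abstract, and the function names are hypothetical.

```python
def scan_time_ms(grid_side, exposure_ms):
    """Time to visit every point of a square scan grid once, in milliseconds.

    Assumes no per-point overhead beyond the exposure itself.
    """
    return grid_side * grid_side * exposure_ms


def max_frame_rate(grid_side, exposure_ms):
    """Upper bound on frames per second if each frame needs one full scan."""
    return 1000.0 / scan_time_ms(grid_side, exposure_ms)


# Settings quoted in the abstract: 16 x 16 points at 0.4 ms per point.
frame_ms = scan_time_ms(16, 0.4)
fps = max_frame_rate(16, 0.4)
# Output resolution is 64 x 64, i.e. 16 times as many pixels as scan points.
upsampling_factor = (64 * 64) // (16 * 16)
```

Under these assumptions a frame takes about 102 ms to acquire, which bounds the rate at a little under 10 frames per second and is consistent with the figure quoted in the abstract.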

EquiBench: Benchmarking Code Reasoning Capabilities of Large Language Models via Equivalence Checking

Feb 18, 2025

Abstract:Equivalence checking, i.e., determining whether two programs produce identical outputs for all possible inputs, underpins a broad range of applications, including software refactoring, testing, and optimization. We present the task of equivalence checking as a new way to evaluate the code reasoning abilities of large language models (LLMs). We introduce EquiBench, a dataset of 2400 program pairs spanning four programming languages and six equivalence categories. These pairs are systematically generated through program analysis, compiler scheduling, and superoptimization, covering nontrivial structural transformations that demand deep semantic reasoning beyond simple syntactic variations. Our evaluation of 17 state-of-the-art LLMs shows that OpenAI o3-mini achieves the highest overall accuracy of 78.0%. In the most challenging categories, the best accuracies are 62.3% and 68.8%, only modestly above the 50% random baseline for binary classification, indicating significant room for improvement in current models' code reasoning capabilities.
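As a toy illustration of the task, the sketch below pairs a loop with a closed-form rewrite and uses random testing to look for an input on which two programs disagree. These programs and the `find_counterexample` helper are hypothetical and are not drawn from EquiBench. Random testing can only refute equivalence, never prove it, which is part of why the task calls for semantic reasoning.

```python
import random


def sum_loop(n):
    """Sum of 1..n computed with an explicit loop."""
    total = 0
    for i in range(1, n + 1):
        total += i
    return total


def sum_closed_form(n):
    """Structurally different program; agrees with sum_loop for n >= 0."""
    return n * (n + 1) // 2


def sum_off_by_one(n):
    """Looks similar but is not equivalent: it computes the sum of 0..n-1."""
    return n * (n - 1) // 2


def find_counterexample(f, g, trials=200, seed=0):
    """Return a non-negative input where f and g differ, or None if none is found."""
    rng = random.Random(seed)
    for _ in range(trials):
        n = rng.randint(0, 1000)
        if f(n) != g(n):
            return n
    return None
```

Note that `sum_loop` and `sum_closed_form` agree only on non-negative inputs (for `n = -2` the loop returns 0 while the formula returns 1), so whether a pair counts as equivalent depends on the input domain under consideration.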

Solving the long-tailed distribution problem by exploiting the synergies and balance of different techniques

Jan 23, 2025

Abstract:In real-world data, long-tailed data distribution is common, making it challenging for models trained on empirical risk minimisation to learn and classify tail classes effectively. While many studies have sought to improve long tail recognition by altering the data distribution in the feature space and adjusting model decision boundaries, research on the synergy and corrective approach among various methods is limited. Our study delves into three long-tail recognition techniques: Supervised Contrastive Learning (SCL), Rare-Class Sample Generator (RSG), and Label-Distribution-Aware Margin Loss (LDAM). SCL enhances intra-class clusters based on feature similarity and promotes clear inter-class separability but tends to favour dominant classes only. When RSG is integrated into the model, we observed that the intra-class features further cluster towards the class centre, which demonstrates a synergistic effect together with SCL's principle of enhancing intra-class clustering. RSG generates new tail features and compensates for the tail feature space squeezed by SCL. Similarly, LDAM is known to introduce a larger margin specifically for tail classes; we demonstrate that LDAM further bolsters the model's performance on tail classes when combined with the more explicit decision boundaries achieved by SCL and RSG. Furthermore, SCL can compensate for the dominant class accuracy sacrificed by RSG and LDAM. Our research emphasises the synergy and balance among the three techniques, with each amplifying the strengths of the others and mitigating their shortcomings. Our experiment on long-tailed distribution datasets, using an end-to-end architecture, yields competitive results by enhancing tail class accuracy without compromising dominant class performance, achieving a balanced improvement across all classes.
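The larger tail-class margin that LDAM introduces can be sketched as follows. In the original LDAM formulation the margin for class j is proportional to n_j^(-1/4), where n_j is the class's training-sample count, and the margin is subtracted from the true-class logit before a scaled softmax cross-entropy. The `max_margin` and `scale` values below are illustrative assumptions, and the sketch covers only the LDAM component, not the combination with SCL and RSG described in the abstract.

```python
import math


def ldam_margins(class_counts, max_margin=0.5):
    """Per-class margins proportional to n_j ** -0.25, rescaled so the
    rarest class receives max_margin (an illustrative value)."""
    raw = [n ** -0.25 for n in class_counts]
    factor = max_margin / max(raw)
    return [r * factor for r in raw]


def ldam_loss(logits, label, margins, scale=30.0):
    """Cross-entropy after subtracting the class margin from the true-class logit.

    The scale factor is an illustrative assumption.
    """
    adjusted = list(logits)
    adjusted[label] -= margins[label]
    scaled = [scale * z for z in adjusted]
    # Numerically stable log-sum-exp.
    top = max(scaled)
    log_sum = top + math.log(sum(math.exp(z - top) for z in scaled))
    return log_sum - scaled[label]


# Head, medium, and tail classes with 10000, 100, and 1 samples.
margins = ldam_margins([10000, 100, 1])
```

With these counts the margins grow from the head class to the tail class, so a tail-class example must be classified with a wider gap before its loss becomes small.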

Surgical Visual Understanding (SurgVU) Dataset

Jan 16, 2025

Abstract:Owing to recent advances in machine learning and the ability to harvest large amounts of data during robotic-assisted surgeries, surgical data science is ripe for foundational work. We present a large dataset of surgical videos and their accompanying labels for this purpose. We describe how the data was collected and some of its unique attributes. Multiple example problems are outlined. Although the dataset was curated for a particular set of scientific challenges (in an accompanying paper), it is general enough to be used for a broad range of machine learning questions. Our hope is that this dataset exposes the larger machine learning community to the challenging problems within surgical data science, and becomes a touchstone for future research. The videos are available at https://storage.googleapis.com/isi-surgvu/surgvu24_videos_only.zip, the labels at https://storage.googleapis.com/isi-surgvu/surgvu24_labels_updated_v2.zip, and a validation set for the tool detection problem at https://storage.googleapis.com/isi-surgvu/cat1_test_set_public.zip.

Synthetic Data Generation for Residential Load Patterns via Recurrent GAN and Ensemble Method

Oct 20, 2024

Abstract:Generating synthetic residential load data that can accurately represent actual electricity consumption patterns is crucial for effective power system planning and operation. The necessity for synthetic data is underscored by the inherent challenges associated with using real-world load data, such as privacy considerations and logistical complexities in large-scale data collection. In this work, we tackle the above-mentioned challenges by developing the Ensemble Recurrent Generative Adversarial Network (ERGAN) framework to generate high-fidelity synthetic residential load data. ERGAN leverages an ensemble of recurrent Generative Adversarial Networks, augmented by a loss function that concurrently takes into account adversarial loss and differences between statistical properties. Our developed ERGAN can capture diverse load patterns across various households, thereby enhancing the realism and diversity of the synthetic data generated. Comprehensive evaluations demonstrate that our method consistently outperforms established benchmarks in the synthetic generation of residential load data across various performance metrics including diversity, similarity, and statistical measures. The findings confirm the potential of ERGAN as an effective tool for energy applications requiring synthetic yet realistic load data. We also make the generated synthetic residential load patterns publicly available.

* 12 pages
